Sample preparation is an essential part of method development and is critical to successful analytical determinations. With cannabis and cannabis products, the analyst faces a very challenging matrix and target analytes that may range from trace to percent levels, which places considerable demands on sample preparation techniques [1]. In this study, the optimal sample preparation, or “extraction”, method for potency analysis of cannabis flower was determined to be a methanol extraction coupled with filtration through regenerated cellulose filters.

In the United States (US), Canada, and other countries where medicinal and/or adult recreational cannabis has been legalized, regulators require a panel of chemical tests to ensure the quality and safety of products before retail sale [2]. Cannabis testing can be divided into two categories: quality and safety. Quality testing, which includes potency analysis (also known as cannabinoid testing or cannabinoid content), is performed to verify that the product meets producer/grower expectations and complies with government regulations. Safety testing is conducted under regulatory guidelines to ensure that consumers are not exposed to toxicants such as pesticides, mycotoxins, heavy metals, residual solvents, and microbial contaminants.

Potency testing determines total cannabinoid content, focusing specifically on tetrahydrocannabinol (THC) and cannabidiol (CBD). In the US, the main driver for accurate total THC measurement is distinguishing hemp (grown legally for industrial or medicinal use), defined as Cannabis sativa with a THC concentration ≤ 0.3 %, from cannabis (Cannabis spp.), defined as any cannabis plant with a measured THC concentration above 0.3 % [3]. Potency testing is typically performed by liquid chromatography (LC) with UV detection to quantify the major cannabinoids.
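The hemp/cannabis distinction above reduces to a single threshold comparison on total THC. The sketch below assumes total THC has already been calculated and expressed in % (w/w); the function name and return labels are hypothetical, and it deliberately ignores regulatory details such as measurement uncertainty or the basis on which the percentage is reported.

```python
# Minimal sketch: classify a sample by total THC against the 0.3 % limit
# quoted in the text. The function name and labels are hypothetical; this
# is not a regulatory tool and ignores measurement uncertainty.

HEMP_TOTAL_THC_LIMIT_PCT = 0.3  # hemp: total THC <= 0.3 %


def classify_by_total_thc(total_thc_pct: float) -> str:
    """Return 'hemp' if total THC is at or below the limit, else 'cannabis'."""
    if total_thc_pct < 0:
        raise ValueError("total THC cannot be negative")
    return "hemp" if total_thc_pct <= HEMP_TOTAL_THC_LIMIT_PCT else "cannabis"


if __name__ == "__main__":
    for value in (0.25, 0.30, 0.31, 14.0):
        print(f"{value:5.2f} % total THC -> {classify_by_total_thc(value)}")
```

Note that a value exactly at 0.3 % is classified as hemp because the limit is inclusive (≤).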

In addition to THC and CBD, their respective acidic precursors must be included when reporting total potency. Tetrahydrocannabinolic acid (THCA) is the non-psychoactive precursor of THC, and cannabidiolic acid (CBDA) is the precursor of CBD; both are converted to their neutral forms by decarboxylation, for example on heating [4,5].

Methods and Materials

Sample Preparation

All samples were homogenized using an immersion blender with a dry material grinder. The nominal sample masses were 200 mg for flower, 500 mg for edibles, and 250 mg for candy.

Potency Extraction Method (1)

Twenty milliliters (mL) of methanol (MeOH) was added to each sample. The samples were mechanically shaken for 10 minutes and centrifuged for 5 minutes.
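With the nominal 200 mg of flower in 20 mL of MeOH, a measured concentration in the extract converts back to % (w/w) of the sample by a simple mass balance. The sketch below assumes that the extract volume equals the solvent volume added (it ignores the small volume contributed by the sample itself) and that any post-extraction dilution is captured by one dilution factor; the function name and the example reading are hypothetical.

```python
# Sketch: convert a measured cannabinoid concentration in an extract to
# % (w/w) of the original sample. Assumptions (not from the source): the
# extract volume equals the solvent volume, and any dilution before
# injection is described by a single dilution factor.


def percent_w_w(conc_ug_per_ml: float, extract_volume_ml: float,
                sample_mass_mg: float, dilution_factor: float = 1.0) -> float:
    """Return analyte content as % (w/w) of the sample."""
    if sample_mass_mg <= 0:
        raise ValueError("sample mass must be positive")
    # Total analyte mass recovered in the extract (ug).
    analyte_ug = conc_ug_per_ml * dilution_factor * extract_volume_ml
    # Sample mass converted to ug (1 mg = 1000 ug).
    sample_ug = sample_mass_mg * 1000.0
    return 100.0 * analyte_ug / sample_ug


if __name__ == "__main__":
    # Nominal Method 1 values for flower: 200 mg sample, 20 mL MeOH.
    # The 7.0 ug/mL reading and the 20x dilution are hypothetical.
    print(f"{percent_w_w(7.0, 20.0, 200.0, dilution_factor=20.0):.2f} %")
```

For an undiluted extract the default `dilution_factor=1.0` applies.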

Potency Extraction Method (2)

Ten mL of water was added to each sample, and the samples were mechanically shaken for 10 minutes. Twenty mL of acetonitrile (ACN) was then added, and each sample was vortexed. An EN QuEChERS extraction salt packet was added to each sample, and the samples were placed on a mechanical shaker for 2 minutes and then centrifuged for 5 minutes.

Each extract was split into two portions, and each portion was filtered with one of two syringe filters: (1) a regenerated cellulose (RC) syringe filter (Agilent Technologies, 4 mm, 0.45 µm) or (2) a PTFE syringe filter (Agilent Technologies, 4 mm, 0.45 µm). The filtered extracts were analyzed by ultra-performance liquid chromatography with photodiode array detection (UPLC-PDA).

Calibration

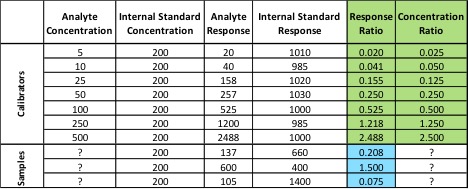

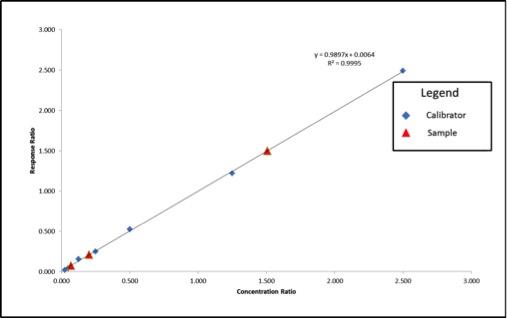

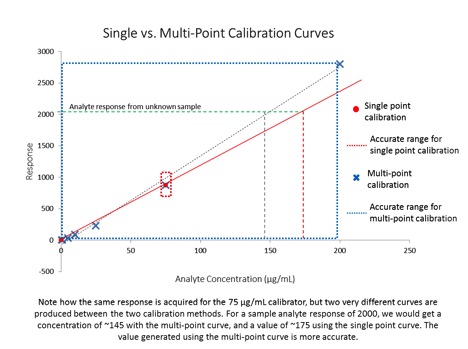

Standards were obtained at a concentration of 1 mg/mL for the following cannabinoids: cannabidivarin (CBDV), tetrahydrocannabivarin (THCV), cannabidiol (CBD), cannabigerol (CBG), cannabidiolic acid (CBDA), cannabigerolic acid (CBGA), cannabinol (CBN), Δ9-tetrahydrocannabinol (Δ9-THC), cannabichromene (CBC), and tetrahydrocannabinolic acid (THCA). Equal volumes of each standard were combined and diluted with MeOH to make a mixed stock solution of 10 µg/mL. Serial dilutions of the stock were made to give 5, 1, and 0.5 µg/mL for the calibration curve (Figure 1).
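The stock and calibrator preparation follows C1·V1 = C2·V2. The sketch below assumes a 10 mL final volume for the mixed stock, a 1 mL final volume for each calibration level, that 10 µg/mL is the per-cannabinoid concentration in the stock, and that each level is made from the previous one (a true serial dilution); none of these volumes are given in the source.

```python
# Sketch of the calibration-standard arithmetic (C1 * V1 = C2 * V2).
# Assumptions (not stated in the source): 10 mL mixed-stock volume,
# 1 mL per calibration level, 10 ug/mL per cannabinoid in the stock,
# and each level prepared from the previous one.
from __future__ import annotations

STANDARDS = ["CBDV", "THCV", "CBD", "CBG", "CBDA", "CBGA",
             "CBN", "Δ9-THC", "CBC", "THCA"]
STANDARD_CONC_UG_ML = 1000.0  # 1 mg/mL = 1000 ug/mL
STOCK_CONC_UG_ML = 10.0       # per-cannabinoid concentration in mixed stock


def aliquot_ml(c_source: float, c_target: float, v_final_ml: float) -> float:
    """Volume of source solution so that c_source * v1 = c_target * v_final."""
    if c_target > c_source:
        raise ValueError("target concentration exceeds source concentration")
    return c_target * v_final_ml / c_source


def mixed_stock_plan(v_final_ml: float = 10.0) -> tuple[float, float]:
    """Return (volume of each standard, volume of MeOH) for the mixed stock."""
    each = aliquot_ml(STANDARD_CONC_UG_ML, STOCK_CONC_UG_ML, v_final_ml)
    meoh = v_final_ml - each * len(STANDARDS)
    return each, meoh


def serial_dilution_plan(levels=(5.0, 1.0, 0.5), v_final_ml: float = 1.0):
    """List (source conc, target conc, source mL, MeOH mL) for each level."""
    plan = []
    source = STOCK_CONC_UG_ML
    for target in levels:
        v_src = aliquot_ml(source, target, v_final_ml)
        plan.append((source, target, v_src, v_final_ml - v_src))
        source = target  # the next level is made from this one
    return plan


if __name__ == "__main__":
    each, meoh = mixed_stock_plan()
    print(f"Mixed stock: {each:.3f} mL of each standard + {meoh:.3f} mL MeOH")
    for source, target, v_src, v_meoh in serial_dilution_plan():
        print(f"{target:>4} ug/mL: {v_src:.3f} mL of {source} ug/mL "
              f"+ {v_meoh:.3f} mL MeOH")
```

Under these assumptions each 1 mg/mL standard is diluted 100-fold, so ten 0.1 mL aliquots plus 9 mL of MeOH give 10 mL of stock.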

Instrumental Method

Instrument parameters followed Agilent Application Note 5991-9285EN [6]. A UPLC system with a PDA detector (Waters Corp., Milford, MA) was used for potency analysis, and compounds were separated on an InfinityLab Poroshell 120 EC-C18 column, 3.0 × 50 mm, 2.7 µm (Agilent Technologies, Wilmington, DE). The organic mobile phase was 0.05 % (v/v) formic acid in HPLC-grade MeOH, and the aqueous mobile phase was 0.1 % (v/v) formic acid in HPLC-grade water. The mobile phase gradient is shown in Table 1. The flow rate was 1 mL/min (9.5-minute total program), the injection volume was 5 µL, and the column temperature was 50 °C.
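The stated LC conditions can be captured as a small, immutable configuration record, which also makes the associated arithmetic explicit (mobile phase consumed per run, formic acid needed per batch). This is a hypothetical structure, not a vendor method file; the gradient from Table 1 is not reproduced, and the per-run volume covers only the 9.5-minute program.

```python
# Sketch: the LC conditions stated in the text as a hypothetical config
# record. The Table 1 gradient is not included; the per-run volume covers
# only the 9.5-minute program (no extra equilibration).
from dataclasses import dataclass


@dataclass(frozen=True)
class LcMethod:
    column: str = "InfinityLab Poroshell 120 EC-C18, 3.0 x 50 mm, 2.7 um"
    aqueous_phase: str = "0.1 % (v/v) formic acid in water"
    organic_phase: str = "0.05 % (v/v) formic acid in MeOH"
    flow_ml_min: float = 1.0
    run_time_min: float = 9.5
    injection_ul: float = 5.0
    column_temp_c: float = 50.0

    def mobile_phase_per_run_ml(self) -> float:
        """Total mobile phase (both channels combined) pumped per run."""
        return self.flow_ml_min * self.run_time_min


def formic_acid_ml(percent_v_v: float, batch_ml: float) -> float:
    """Formic acid volume needed for a batch at the given % (v/v)."""
    return percent_v_v / 100.0 * batch_ml


if __name__ == "__main__":
    method = LcMethod()
    print(f"Mobile phase per run: {method.mobile_phase_per_run_ml():.1f} mL")
    print(f"Formic acid per 1 L aqueous: {formic_acid_ml(0.1, 1000.0):.2f} mL")
    print(f"Formic acid per 1 L organic: {formic_acid_ml(0.05, 1000.0):.2f} mL")
```

A frozen dataclass keeps the documented conditions from being modified accidentally at run time.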

Discussion and Results

Table 2 summarizes the relative standard deviations (% RSD) obtained for the THC calibrator (1 µg/mL) and for one extract of a homogeneous sample (7 replicates each).
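% RSD is the sample standard deviation divided by the mean, expressed as a percentage. The sketch below computes it with Python's `statistics` module; the seven peak areas in the usage example are hypothetical and are not the Table 2 data.

```python
# Sketch: percent relative standard deviation (% RSD) of replicate
# measurements, using the sample (n - 1) standard deviation.
import statistics


def percent_rsd(values) -> float:
    """Return 100 * sample standard deviation / mean."""
    if len(values) < 2:
        raise ValueError("need at least two replicates")
    mean = statistics.mean(values)
    if mean == 0:
        raise ValueError("mean is zero; % RSD is undefined")
    return 100.0 * statistics.stdev(values) / mean


if __name__ == "__main__":
    # Hypothetical peak areas for 7 replicate injections.
    areas = [1002.0, 998.0, 1005.0, 995.0, 1001.0, 999.0, 1000.0]
    print(f"% RSD (n = {len(areas)}): {percent_rsd(areas):.2f}")
```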

Cannabinoid potency was determined for a range of cannabis plant and cannabis product samples using each extraction technique. In the chromatograms, THC eluted at ~8.08 minutes and CBD at ~4.61 minutes (Figure 2).
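Peaks can be assigned to analytes by matching their retention times against the expected values. The sketch below uses the approximate retention times from the text (CBD ~4.61 min, THC ~8.08 min) and a hypothetical ±0.10 min window; it is a simplification, since a PDA method would normally also compare UV spectra before confirming an identity.

```python
# Sketch: assign chromatographic peaks to analytes by retention time.
# Expected times are the approximate values from the text; the +/- 0.10 min
# window is a hypothetical tolerance, not a validated criterion.

EXPECTED_RT_MIN = {"CBD": 4.61, "THC": 8.08}
RT_WINDOW_MIN = 0.10


def assign_peaks(peaks, expected=EXPECTED_RT_MIN, window=RT_WINDOW_MIN):
    """peaks: list of (retention_time_min, area) tuples.

    Returns {analyte: (rt, area)}, choosing the closest peak within the
    window for each analyte; analytes with no peak in the window are omitted.
    """
    assigned = {}
    for name, rt_ref in expected.items():
        candidates = [p for p in peaks if abs(p[0] - rt_ref) <= window]
        if candidates:
            assigned[name] = min(candidates, key=lambda p: abs(p[0] - rt_ref))
    return assigned


if __name__ == "__main__":
    # Hypothetical peak table: (retention time in min, peak area).
    peaks = [(4.60, 120.0), (6.20, 50.0), (8.10, 900.0)]
    for analyte, (rt, area) in assign_peaks(peaks).items():
        print(f"{analyte}: rt = {rt:.2f} min, area = {area:.1f}")
```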

Total THC and total CBD were calculated for each sample using the equations below. Equation 1 was used because it accounts for the presence of THCA as well as the difference in molecular weight between THC and THCA (since THCA will eventually convert to THC, it must be included in the calculation).

$$\text{Total THC} = \text{THC} + 0.877 \times \text{THCA} \tag{1}$$

$$\text{Total CBD} = \text{CBD} + 0.877 \times \text{CBDA} \tag{2}$$
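Total THC is commonly calculated as THC + 0.877 × THCA, where 0.877 is the ratio of the molar mass of THC (C21H30O2) to that of THCA (C22H30O4), i.e. the fraction of THCA mass that remains after decarboxylation (loss of CO2). CBD and CBDA share these formulas, so the same factor applies to total CBD. The sketch below derives the factor from approximate atomic masses and applies it; the function names and the example results are hypothetical.

```python
# Sketch: decarboxylation factor and total cannabinoid calculation.
# THC and CBD are C21H30O2; THCA and CBDA are C22H30O4.
from __future__ import annotations

# Approximate standard atomic masses (g/mol).
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "O": 15.999}

NEUTRAL_FORMULA = {"C": 21, "H": 30, "O": 2}  # THC, CBD
ACID_FORMULA = {"C": 22, "H": 30, "O": 4}     # THCA, CBDA


def molar_mass(formula: dict[str, int]) -> float:
    """Molar mass (g/mol) from an element -> atom count mapping."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


# Fraction of the acid's mass retained as the neutral form (about 0.877).
DECARB_FACTOR = molar_mass(NEUTRAL_FORMULA) / molar_mass(ACID_FORMULA)


def total_cannabinoid_pct(neutral_pct: float, acid_pct: float,
                          factor: float = 0.877) -> float:
    """Total = neutral form + factor * acid form, all in % (w/w)."""
    return neutral_pct + factor * acid_pct


if __name__ == "__main__":
    print(f"Molar-mass ratio: {DECARB_FACTOR:.4f}")
    # Hypothetical flower result: 0.5 % THC and 15.0 % THCA.
    print(f"Total THC: {total_cannabinoid_pct(0.5, 15.0):.2f} %")
```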

Table 3 shows % THC and total THC for the same flower samples, each prepared with all four sample preparation techniques described earlier (two extraction methods, each followed by RC or PTFE filtration). Figure 3 shows LC chromatograms for flower sample 03281913A-2 and edible sample 03281912-1.

The results indicated that “Potency Extraction Method 2” (ACN/QuEChERS extraction) coupled with the RC filter gave a positive bias of 7.29 % in total THC relative to the other extraction techniques. Because the other three techniques gave total THC values within 2 % of each other, the total THC of the sample is more likely ~14 %.
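The comparison above can be expressed as the relative bias of one technique against the mean of the others, plus the relative spread among those others. The text does not state whether the 7.29 % and 2 % figures are relative percentages or percentage points; the sketch below assumes relative values, and the total THC numbers in the example are hypothetical, not the Table 3 data.

```python
# Sketch: relative bias of one preparation technique versus the mean of the
# other techniques, and the relative spread among those others. Assumes both
# figures are relative (%), which the source does not state explicitly.
import statistics


def relative_bias_pct(candidate: float, references) -> float:
    """100 * (candidate - mean(references)) / mean(references)."""
    ref_mean = statistics.mean(references)
    return 100.0 * (candidate - ref_mean) / ref_mean


def relative_spread_pct(values) -> float:
    """100 * (max - min) / mean: how closely the values agree."""
    return 100.0 * (max(values) - min(values)) / statistics.mean(values)


if __name__ == "__main__":
    # Hypothetical total THC results (%) for three techniques.
    others = [14.0, 14.1, 13.9]
    candidate = 15.0  # hypothetical ACN/QuEChERS + RC result
    print(f"Spread among other techniques: {relative_spread_pct(others):.2f} %")
    print(f"Bias of candidate: {relative_bias_pct(candidate, others):+.2f} %")
```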

Because the dilution used for the above data set lowered the CBD concentration in the injected extract, an undiluted extract was also analyzed. These data are reported in Table 4.

The CBD results indicated that “Potency Extraction Method 1” (methanol extraction) coupled with the RC filter gave greater CBD recovery, which may indicate a loss of CBD during the ACN/QuEChERS extraction.

For a homogeneous cannabis flower sample averaging ~14 % total THC and 0.06 % total CBD, the optimal sample preparation was determined to be a methanol extraction coupled with filtration through a regenerated cellulose filter. Because potency remains at the forefront of cannabis regulatory testing, it is important to use the right sample preparation for your cannabis samples.

References

1. Wang M, Wang YH, Avula B, Radwan MM, Wanas AS, Mehmedic Z, et al. Quantitative Determination of Cannabinoids in Cannabis and Cannabis Products Using Ultra-High-Performance Supercritical Fluid Chromatography and Diode Array/Mass Spectrometric Detection. Journal of Forensic Sciences 2016;62(3):602-11.

2. Curtis M, Fausett E, Hale WA, Honnold R, Westland J, Stone PJ, Hollis JS, Macherone A. Cannabis Science and Technology 2019 (September/October);2(5).

3. Ferguson S. Healthline. August 27, 2020. https://www.healthline.com/health/hemp-vs-marijuana.

4. Taschwer M, Schmid MG. Determination of the relative percentage distribution of THCA and Δ9-THC in herbal cannabis seized in Austria: Impact of different storage temperatures on stability. Forensic Science International 2015;254:167-71.

5. Beadle A. CBDA Vs CBD: What are the differences? Analytical Cannabis. 2019 [cited 2020 Apr 22]. https://www.analyticalcannabis.com/articles/cbda-vs-cbd-what-are-the-differences-312019.

6. Storm C, Zumwalt M, Macherone A. Dedicated Cannabinoid Potency Testing Using the Agilent 1220 Infinity II LC System. Application Note 5991-9285EN. Agilent Technologies, Inc.