Almost as soon as cannabis became recreationally legal, the public started to ask questions about the safety of products being offered by dispensaries – especially in terms of pesticide contamination. As the multiple product recalls show, there is a serious problem with pesticides in cannabis that could pose a danger to consumers. While The Nerd Perspective is grounded firmly in science and fact, the purpose of this column is to share my insights into the cannabis industry, based on my years of experience with multiple regulated industries, with the goal of helping the cannabis industry mature using lessons learned from other established markets. In this article, we’ll take a look at some unique challenges facing cannabis testing labs, what they’re doing to respond to those challenges, and how that can affect the cannabis industry as a whole.

The Big Challenge

Over the past several years, laboratories have quickly ‘grown up’ in terms of technology and expertise, refining their pesticide detection methods to raise data quality and lower detection limits, which ultimately ensures a safer product by better identifying contaminated product. But even though cannabis laboratories are maturing, they’re maturing in an environment far different from that of labs in regulated industries, such as food laboratories. Food safety testing laboratories have been governmentally regulated and funded from almost the very beginning, allowing them some financial breathing room to set up their operation, and ensuring they won’t be penalized for failing samples. In contrast, testing fees for cannabis labs are paid by growers and producers – many of whom are just starting their own business and are short of cash. This creates fierce competition between cannabis laboratories in terms of testing cost and turnaround time. One similarity that the cannabis industry shares with the food industry is consumer and regulatory demand for safe product. This demand requires laboratories to invest in instrumentation and personnel to ensure generation of quality data. In short, the two major demands placed on cannabis laboratories are low cost and scientific excellence. As a chemist with years of experience, I can tell you that scientific excellence isn’t cheap; cannabis laboratories are stuck between a rock and a hard place, and they are feeling the squeeze.

Responding to the Challenge

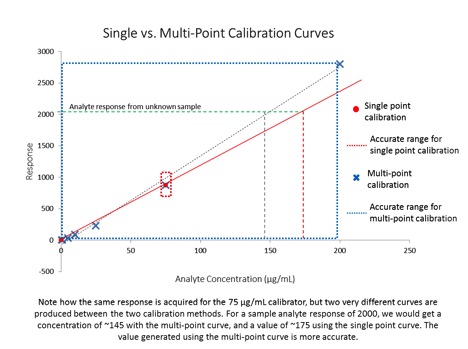

One way for high-quality laboratories to win business is to tout their investment in technology and the sophistication of their methods; they’re selling their science, a practice I stand behind completely. However, due to the fierce competition between labs, some laboratories have oversold their science by using terms like ‘lethal’ or ‘toxic’ juxtaposed with vague statements regarding the discovery of pesticides in cannabis using the highly technical methods that they offer. This juxtaposition can then be reinforced by overstating the importance of ultra-low detection levels outside of any regulatory context. For example, a claim that detecting pesticides at the parts per trillion (ppt) level will better ensure consumer safety than methods run by other labs that only detect pesticides at parts per billion (ppb) concentrations is potentially dangerous, in that it could cause future problems for the cannabis industry as a whole. In short, while accurately distinguishing contaminated samples from clean samples is indeed a good thing, sometimes less isn’t more, bringing us to the second half of the title of this article.

Less isn’t always more…

In my last article, I illustrated the concept of the trace concentrations laboratories detect, finishing up with putting the concept of ppb into perspective. I wasn’t even going to try to illustrate parts per trillion. Parts per trillion is one thousand times less concentrated than parts per billion. To put ppt into perspective, we can’t work with water like I did in my previous article; we have to channel Neil deGrasse Tyson.

The Milky Way galaxy contains about 100 billion stars, and our sun is one of them. Our lonely sun, in the vastness of our galaxy, where light itself takes 100,000 years to traverse, represents a concentration of 10 ppt. On the surface, detecting galactically-low levels of contaminants sounds wonderful. Pesticides are indeed lethal chemicals, and their byproducts are often lethal or carcinogenic as well. From the consumer perspective, we want everything we put in our bodies free of harmful chemicals. Looking at consumer products from The Nerd Perspective, however, the previous sentence changes quite a bit. To be clear, nobody – nerds included – wants food or medicine that will poison them. But let’s explore the gap between ‘poison’ and ‘reality’, and why that gap matters.
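
For readers who want to check the galactic arithmetic, here is a minimal sketch in Python. The function name `one_in_n_as_ppt` is hypothetical and exists only for this illustration; the star count is the approximate 100 billion figure quoted above.

```python
from fractions import Fraction

TRILLION = 10**12


def one_in_n_as_ppt(n: int) -> Fraction:
    """Express 'one part in n' as parts per trillion, using exact arithmetic."""
    return Fraction(TRILLION, n)


# Approximate star count quoted in the text (about 100 billion).
stars_in_milky_way = 100 * 10**9

sun_ppt = one_in_n_as_ppt(stars_in_milky_way)
print(f"One star in {stars_in_milky_way:,} is {sun_ppt} ppt")
```

One sun among 100 billion stars works out to 10 parts per trillion, which is the scale some labs are advertising.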

In reality, according to a study conducted by the FDA in 2011, roughly 37.5%* of the food we consume every day – including meat, fish, and grains – is contaminated with pesticides. Is that a good thing? No, of course it isn’t. It’s not ideal to put anything into our bodies that has been contaminated with the byproducts of human habitation. However, the FDA, EPA, and other governmental agencies have worked for decades on toxicological, ecological, and environmental studies devoted to determining what levels of these toxic chemicals actually have the potential to cause harm to humans. Rather than discuss whether or not any level is acceptable, let’s take it on principle that we won’t drop dead from a lethal dose of pesticides after eating a salad, and instead take a look at the levels the FDA deems ‘acceptable’ for food products. In its 2011 study, the FDA states that “Tolerance levels generally range from 0.1 to 50 parts per million (ppm). Residues present at 0.01 ppm and above are usually measurable; however, for individual pesticides, this limit may range from 0.005 to 1 ppm.” Putting those terms into parts per trillion means that most tolerance levels range from 100,000 to 50,000,000 ppt and the lower limit of ‘usually measurable’ is 10,000 ppt. For the food we eat and feed to our children, levels in parts per trillion are not even discussed because they’re not relevant.
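
The unit conversions behind those numbers are simple enough to sketch in a few lines of Python. This is only an illustration: `PPT_PER_UNIT` and `to_ppt` are hypothetical names, and the values are the ones from the FDA quote above. Concentrations are passed as strings so the arithmetic stays exact rather than picking up floating-point rounding.

```python
from fractions import Fraction

# How many parts per trillion correspond to one unit of each notation.
PPT_PER_UNIT = {"ppm": 10**6, "ppb": 10**3, "ppt": 1}


def to_ppt(value: str, unit: str) -> Fraction:
    """Convert a concentration (decimal string) in ppm, ppb, or ppt to ppt."""
    return Fraction(value) * PPT_PER_UNIT[unit]


# Figures taken from the FDA quote above.
fda_figures = [
    ("low end of typical tolerance", "0.1", "ppm"),
    ("high end of typical tolerance", "50", "ppm"),
    ("usually measurable", "0.01", "ppm"),
]

for label, value, unit in fda_figures:
    print(f"{label}: {value} {unit} = {int(to_ppt(value, unit)):,} ppt")
```

Each step from ppm to ppb to ppt is a factor of one thousand, so even the lowest figure in the FDA quote sits four orders of magnitude above a single part per trillion.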

A specific example of this is arsenic. Everyone knows arsenic is very toxic. However, trace levels of arsenic naturally occur in the environment, and until 2004, arsenic was widely used to protect pressure-treated wood from termite damage. Because of the use of arsenic on wood and in other arsenic-containing pesticides, much of our soil and water now contains some arsenic, which ends up in apples and other produce. These apples get turned into juice, which is freely given to toddlers everywhere. Why, then, has there not been an infant mortality catastrophe? Because even though the arsenic was there (and still is), it wasn’t present at levels that were harmful. In 2013, the FDA published draft guidance stating that the permissible level of arsenic in apple juice was 10 parts per billion (ppb) – 10,000 parts per trillion. None of us would think twice about offering apple juice to our child, and we don’t have to…because the dose makes the poison.

How Does This Relate to the Cannabis Industry?

The concept of permissible exposure levels (a.k.a. maximum residue limits) is an important one that’s understood by laboratories, but is not always considered by the public and the regulators tasked with ensuring cannabis consumer safety. As scientists, it is our job not to misrepresent the impact of our methods or the danger of cannabis contaminants. We should not understate the danger of these toxins, nor should we overstate it. In overstating the danger of these toxins, we indirectly pressure regulators to establish ridiculously low limits for contaminants. Lower limits generally require the use of newer testing technologies, higher levels of technical expertise, and more complicated methods. All of this translates to increased testing costs – costs that are then passed on to growers, producers, and consumers. I don’t envy the regulators in the cannabis industry. Like the labs in the cannabis industry, they’re also stuck between a rock and a hard place: stuck between consumers demanding a safe product and producers demanding low-cost testing. As scientists, let’s help them out by focusing our discussion on the real consumer safety issues that are present in this market.

*average of domestic food (39.5% contaminated) and imported food (35.5% contaminated)